Hey RL Reading Group,

Hope you enjoyed your spring break! Here we are to remind you about reinforcement learning again.

Recap

This month we had two meetings (technically three, but we included the February 1st meeting in the January newsletter). On the 16th, we discussed a talk by Sergey Levine on Offline RL + Behavior Cloning. On the 23rd, we discussed DIAYN, a 2018 paper from Ben Eysenbach et al. Below are recaps of both.

CQL

Initially, Nikhil planned to present Conservative Q-Learning, a paper from Aviral Kumar, Aurick Zhou, George Tucker, and Sergey Levine. But during the meeting, a vote was cast and the majority chose to watch and discuss a recently released video from Berkeley’s RAIL instead.

If you’re interested in CQL, some relevant sources are linked below. The main idea behind CQL is the formulation of a Q-function that lower bounds a policy’s value to mitigate error caused by distribution shift. The method has been quite popular as a baseline recently.

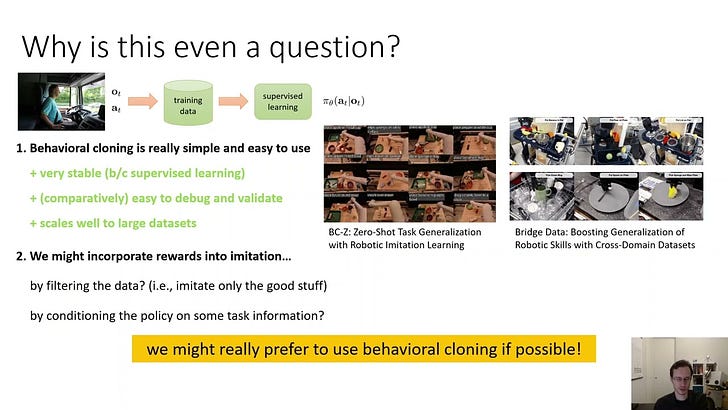
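To make the "lower bound" idea a little more concrete, here’s a minimal sketch of the conservative penalty in one commonly cited variant, CQL(H), written for a single state with discrete actions. The function names, the `alpha` weight, and the toy numbers are our own assumptions for illustration, not code from the paper.

```python
import math

def cql_penalty(q_values, dataset_action):
    """Conservative penalty for one state with discrete actions.

    q_values: list of Q(s, a), one entry per action a.
    dataset_action: index of the action that appears in the offline data.
    """
    # Numerically stable log-sum-exp over all actions (a soft maximum).
    m = max(q_values)
    logsumexp = m + math.log(sum(math.exp(q - m) for q in q_values))
    # Minimizing this pushes Q down on all actions and up on the dataset action.
    return logsumexp - q_values[dataset_action]

def cql_loss(q_values, dataset_action, td_target, alpha=1.0):
    """Squared TD error plus the alpha-weighted conservative penalty."""
    td_error = q_values[dataset_action] - td_target
    return 0.5 * td_error ** 2 + alpha * cql_penalty(q_values, dataset_action)

# The penalty is small when the dataset action already has the highest Q-value...
in_distribution = cql_penalty([10.0, 0.0], dataset_action=0)
# ...and large when an action outside the data looks much better.
out_of_distribution = cql_penalty([0.0, 10.0], dataset_action=0)
```

The penalty is never negative, so adding it can only make overestimated, out-of-distribution actions look worse relative to the ones actually in the dataset.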

Offline RL + Behavior Cloning

The video we watched was also related to offline RL. It was a high-level overview that encapsulated techniques from the following papers:

RvS: What is Essential for Offline RL via Supervised Learning?

Offline Reinforcement Learning as One Big Sequence Modeling Problem

You may recall that we previously discussed this paper in November.

Deep Imitative Models for Flexible Inference, Planning, and Control

ViKiNG: Vision-Based Kilometer-Scale Navigation with Geographic Hints

In the video, Sergey discusses the differences between offline RL and behavior cloning, along with answers to three questions:

Should I run behavioral cloning or offline RL if I have near-optimal data?

Can I get behavioral cloning to solve RL problems?

Can I somehow combine behavior cloning and RL?

Because we only got through the first two questions during our session, we’re posting a summary of his points for the third question here.

Can I somehow combine behavior cloning and RL?

Behavior cloning and RL can be combined most easily as a two-step process:

Learn a density model of your input data

Run a planning algorithm (either at training or test time) using that model

A density model learns a distribution of the data given to an agent. In behavior cloning, you can often think of this as a distribution over the trajectories it’s given.

A planning algorithm, such as MPC or beam search, would then use that model to determine the best actions to take.
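The two steps above can be sketched on a toy problem. Everything here is an assumption made for illustration: the five-state chain, the known `step` dynamics, the smoothed action counts standing in for a learned density model, and the `reward_weight` knob. It isn’t the method from any of the papers, just the general shape of "fit a model of the data, then plan with it".

```python
import math
from collections import Counter

ACTIONS = (-1, +1)

def step(state, action):
    """Toy deterministic dynamics on a chain of states 0..4 (an assumption)."""
    next_state = min(4, max(0, state + action))
    reward = 1.0 if next_state == 4 else 0.0
    return next_state, reward

def fit_behavior_model(trajectories):
    """Step 1: a density model, here smoothed counts giving p(action | state)."""
    counts = Counter((s, a) for traj in trajectories for s, a in traj)

    def log_prob(state, action):
        total = sum(counts[(state, a)] + 1 for a in ACTIONS)
        return math.log((counts[(state, action)] + 1) / total)

    return log_prob

def beam_search(log_prob, start, horizon=4, beam_width=3, reward_weight=5.0):
    """Step 2: plan by keeping only the top-scoring action sequences."""
    beams = [(0.0, start, [])]  # (score, current state, actions so far)
    for _ in range(horizon):
        candidates = []
        for score, state, actions in beams:
            for a in ACTIONS:
                nxt, r = step(state, a)
                # Score = data likelihood of the action + weighted reward.
                new_score = score + log_prob(state, a) + reward_weight * r
                candidates.append((new_score, nxt, actions + [a]))
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:beam_width]
    return beams[0][2]

# Each trajectory is a list of (state, action) pairs; none reaches state 4 from 0.
data = [[(0, +1), (1, +1), (2, -1)], [(2, +1), (3, +1)], [(1, -1), (0, +1)]]
plan = beam_search(fit_behavior_model(data), start=0)
```

Setting `reward_weight` to zero turns the planner back into pure imitation (it just follows the most likely actions in the data), which is one way to see how the reward term is what turns behavior cloning into something closer to RL.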

The examples he uses to illustrate this are the last three papers listed above. In the Trajectory Transformer paper, they learned a trajectory distribution and augmented a likelihood function based on this distribution to incorporate reward; this likelihood function was then passed into the beam search algorithm, which would find the best actions to take to maximize reward.

The imitative model paper, which was built for the autonomous driving domain, does something similar where they instead learn the distribution by fitting normalizing flows and minimizing a custom cost function. ViKiNG is a bit different in that it does perform BC to fit a policy, but it also provides a sequence of goals to perform graph search (via a variant of A*). You can get more details about these approaches in the video below. We don’t know why it’s so quiet, but hopefully you can hear it:

Here are some questions that came up during our discussion:

What is compositionality?

What is the intuitive difference between the two bounds at 14:30 in the video?

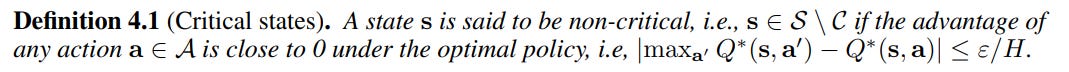

What’s some other work that makes use of the “critical state” concept illustrated at 16:00 in the video?

(1) This one is definitely confusing, especially for those who are familiar with the NLP literature. Compositionality, in principle, refers to the same meta-structure as it does there: composing individual, given elements to form a new, desired element. Grounding this to RL: compositionality refers to being able to form trajectories or policies that exhibit a desired behavior given a bunch of trajectories that each exhibit only parts of that desired behavior. As a very simple example, you can consider two trajectories that respectively go from location A to location B and location B to location C. If the desired behavior is a trajectory that goes from A to C, then you could compose the two given trajectories to arrive at this desired trajectory (this example is usually referred to as “stitching”).

(2) The H terms refer to continually incurred cost for the rest of the horizon because the taken action does not follow the optimal policy (cost for every mistake). In the alternative case (left side inequality), the H terms are gone because cost is only assigned at the timestep when the agent fell, not for any other timesteps.

(3) Surprisingly, from what we could gather, it seems like the critical state concept was formalized in the paper being discussed in the video. Definition 4.1 in Kumar et al. states the following:

Notice that the set of critical states is defined via its complement. Intuitively, this is just saying that if the approximate expected return of taking any other action is similar to the expected return of taking the optimal action, then clearly the state in question cannot be critical because there is more than one “good” action.
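Going back to the stitching example in answer (1), here’s a tiny sketch of what composing trajectories can look like if you do it explicitly. The `stitch` helper and the state names are hypothetical; offline RL methods are usually described as doing this implicitly through value backups rather than by literally searching over the dataset.

```python
from collections import deque

def stitch(trajectories, start, goal):
    """Find a start-to-goal path using only transitions seen in the data."""
    # Build a graph of observed state -> next-state transitions.
    successors = {}
    for traj in trajectories:
        for s, s_next in zip(traj, traj[1:]):
            successors.setdefault(s, []).append(s_next)
    # Breadth-first search over that graph.
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in successors.get(path[-1], []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None  # the data never connects start to goal

# Neither trajectory goes from A to C on its own, but together they do.
dataset = [["A", "x1", "B"], ["B", "x2", "C"]]
path = stitch(dataset, "A", "C")
```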

DIAYN

On February 23, Spencer Gable-Cook facilitated a discussion on Diversity is All You Need: Learning Skills Without a Reward Function by Eysenbach et al. This paper is actually on the “older” side of the RL literature now, dating all the way back to 2018.

The basic idea of this paper was to learn useful skills (i.e., action sequences used to accomplish a high-level task) by forcing the agent towards more entropic solutions without specifying a reward function (so, in an unsupervised way).

Here’s the algorithm, pasted from their paper. You’re probably not going to get much out of it without reading further, so we encourage you to do so!

You can view the recording below, along with some other resources:

Some questions that came up during our discussion:

Intuitively, how does maximizing entropy really help it learn skills? For example, it’s hard to imagine HalfCheetah learning to flip forward just by wanting to arrive in random states.

What work has built on or eclipsed DIAYN since then?

(1) It’s a bit fuzzy, but the idea is that promoting diversity forces skills to be as distinguishable from each other as possible, and random skills are indistinguishable. If you force the skills an agent develops to be distinguishable, then theoretically, each will arrive at some state that could not have been reached by any other skill (for example, the end of a continuous control task). Specifically, skills are distinguished by states: by maximizing the mutual information between skills and states, they ensure that each state effectively describes a skill that was used to reach it. Conversely, by minimizing the mutual information between skills and actions given state information, they ensure that states are the only information used to distinguish skills.

(2) This is a great question. While it requires a lot of knowledge and literature review to know how every single piece that has cited this paper has improved on it, we can at least identify those pieces by looking at Semantic Scholar. Most works, such as SLIDE, state that DIAYN is poorly equipped to discover more difficult skills and propose their own alternatives.
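For reference, the mutual information story in answer (1) corresponds to the objective below, written in the paper’s notation as best we recall it: S is the state, A the action, Z the skill, q_φ a learned discriminator that guesses the skill from the state, and p(z) the fixed prior over skills. Treat it as a summary sketch and check the paper for the exact statement.

```latex
% DIAYN objective: skills should determine states, not actions,
% and the policy should stay as random as possible.
\mathcal{F}(\theta)
  = I(S; Z) + \mathcal{H}[A \mid S] - I(A; Z \mid S)
  = \mathcal{H}[Z] - \mathcal{H}[Z \mid S] + \mathcal{H}[A \mid S, Z]

% Pseudo-reward given to the skill-conditioned policy in place of a task reward:
r_z(s, a) = \log q_\phi(z \mid s) - \log p(z)
```

The second equality follows from expanding each mutual information term into a difference of entropies. The pseudo-reward is high when the discriminator can tell which skill produced the current state, which is what pushes skills toward distinguishable states.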

Administrivia

Next meeting

Since we’re back from the break and we don’t have anyone signed up, we’ll cancel this week. We’ll meet again on March 16th.

Discord server

Just as we stated last time, we have a Discord server!

Sign up to present

If a meme won’t convince you, we don’t know what will. Note that in the spreadsheet below we have a lot of presentation requests listed in the second tab in case you don’t know what to present on but still want to!

In and around RL

We promise that this isn’t a Sergey Levine fanpage, and that there are a ton of other researchers working on RL, along with applications in more diverse domains than just robotics. You’ll find a few below, plus some other cool resources:

Deep RL for nuclear research :) You *probably* can’t reproduce this one yourself…

A chapter on imitation learning from the cs237b (Principles of Robot Autonomy) lecture notes. This might be useful for people wanting to understand the basics of imitation learning, behavior cloning, DAgger, etc.

We’ve mentioned the Trajectory Transformer paper a bunch in this post. Remember the similar Decision Transformer paper? What if someone made it work for online RL? Yeah, they did:

A new method for constructing offline RL datasets (super, super necessary!)

Okay sorry, there is *one* Levine paper in this section, but it’s worth it because it’s further discussion of offline RL: How to Leverage Unlabeled Data in Offline Reinforcement Learning. You may notice that we also posted this one last month; we’re posting it here again because of its relevance to the topics we talked about this month. This one’s trippy: instead of learning a reward function (inverse RL style) from labeled data and applying it to unlabeled data, they simply mark unlabeled data with zero reward. This seems like total clickbait, but this is actually what they do. If this bothers you, you should click the paper to find out why ;)

That’s all for this edition of the newsletter. Let us know if there’s anything you want to add for the next newsletter or any other suggestions you have for us, and as always, sign up to present. See you soon!

Trying to clone productive behavior,

RL Reading Group Coordinators